In my previous post I had a TSQL script to move the Adventure Works DW database to current dates. The second idea was to create attribute members that point to CurrentDate, CurrentMonth and Current12Months. The reason for this is that named sets in BISM Multidimensional (BISM MD) are not supported in Tabular and Power Pivot. Named sets are also not part of DAXMD, which integrates Power View for SharePoint with an external BISM MD model. So why not try to create attributes that might replace named sets?

Create the Current Members With TSQL

Compared with my previous blog post I have made one small change and added Distinct to the view. The reason is that 2008 was a leap year, so shifting the dates five years forward produces 2013-02-28 twice: once from the real 2008-02-28 and once from the leap day 2008-02-29. Here I move the date dimension five years forward.
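As a quick illustration of the leap-year collision before the view definition, this small query shifts both dates the same way the view does. The column aliases are only for illustration, and the literals assume the date dimension contains both 2008-02-28 and 2008-02-29.

```sql
-- Both the real 28 February and the leap day land on the same shifted date,
-- because 2013 has no 29 February and DATEADD falls back to the last valid day.
SELECT
    DATEADD(YEAR, 5, CAST('20080228' AS date)) AS ShiftedFeb28,  -- 2013-02-28
    DATEADD(YEAR, 5, CAST('20080229' AS date)) AS ShiftedFeb29;  -- 2013-02-28
```

Since every column in the view is derived from the shifted date, the two source rows become identical and Distinct collapses them into one.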

CREATE View [dbo].[DateDimensionUpdate]

As

(

Select Distinct

DATEADD(YEAR,5,FullDateAlternateKeY) as FulldateAlternateKey5Y,

DATEDIFF(MONTH,DATEADD(YEAR,5,FullDateAlternateKeY),GetDate()) as MonthSequence,

DateName(m,DATEADD(YEAR,5,FullDateAlternateKeY)) As MontName,

DatePart(m,DATEADD(YEAR,5,FullDateAlternateKeY)) As MontNbr,

Year(DATEADD(YEAR,5,FullDateAlternateKeY)) as Year5,

Year(DATEADD(YEAR,5,FullDateAlternateKeY))*100 + Month(DATEADD(YEAR,5,FullDateAlternateKeY)) As YearMonth5,

Year(DATEADD(YEAR,5,FullDateAlternateKeY))*100 + Datepart(qq,DATEADD(YEAR,5,FullDateAlternateKeY)) As yearQty5,

Case When DATEADD(YEAR,5,FullDateAlternateKeY) = Convert(varchar(8),GetDate(),112) Then 'Y' Else 'N' End As CurrentDate,

Case When DATEDIFF(MONTH,DATEADD(YEAR,5,FullDateAlternateKeY),GetDate()) = 0 Then 'Y' Else 'N' End as CurrentMonth,

Case When DATEDIFF(MONTH,DATEADD(YEAR,5,FullDateAlternateKeY),GetDate()) between 0 and 2 Then 'Y' Else 'N' End as CurrentThreeMonths,

Case When DATEDIFF(MONTH,DATEADD(YEAR,5,FullDateAlternateKeY),GetDate()) between 0 and 5 Then 'Y' Else 'N' End as CurrentSixMonths,

Case When DATEDIFF(MONTH,DATEADD(YEAR,5,FullDateAlternateKeY),GetDate()) between 0 and 11 Then 'Y' Else 'N' End as Current12Months,

Case When DATEDIFF(MONTH,DATEADD(YEAR,5,FullDateAlternateKeY),GetDate()) between 0 and 23 Then 'Y' Else 'N' End as Current24Months,

Case When DATEDIFF(MONTH,DATEADD(YEAR,5,FullDateAlternateKeY),GetDate()) between 12 and 23 Then 'Y' Else 'N' End as Current12MonthsLY

From [dbo].[DimDate]

)
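A minimal sanity check of the flags, assuming the view above has been created and that the shifted dimension actually covers today's date. The first query shows how many days and which date range each value of Current12Months covers. The second uses two made-up literal dates to show that DATEDIFF(MONTH, ...) counts month boundaries crossed rather than elapsed days, which is why the flags line up with whole calendar months.

```sql
-- Row count and date range for each value of the Current12Months flag.
SELECT
    Current12Months,
    COUNT(*)                    AS Days,
    MIN(FulldateAlternateKey5Y) AS FirstDate,
    MAX(FulldateAlternateKey5Y) AS LastDate
FROM [dbo].[DateDimensionUpdate]
GROUP BY Current12Months;

-- DATEDIFF(MONTH, ...) counts month boundaries, not elapsed days.
SELECT
    DATEDIFF(MONTH, '20130131', '20130201') AS OneDayApart,  -- 1
    DATEDIFF(MONTH, '20130101', '20130131') AS SameMonth;    -- 0
```

If the 'Y' row is missing from the first result, the shifted date dimension probably does not reach the current month and the number of years added needs adjusting.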

The second view is required to move one of the fact tables 7 years ahead.

Create View [dbo].[FactInternetSalesUpdate]

AS

(

Select

CustomerKey,

ProductKey,

DATEADD(YEAR,7,OrderDate) As OrderDate,

DATEADD(YEAR,7,ShipDate) As ShipDate,

DATEADD(YEAR,7,DueDate) As DueDate,

OrderQuantity

from [dbo].[FactInternetSales])
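Before building the cube it can be worth checking that the shifted fact dates fall inside the shifted date dimension. This is only a sketch: it assumes both views exist as defined above and that the fact table is related to the date dimension on the shifted order date, which may differ from how your data source view is set up.

```sql
-- Date range of the shifted fact rows.
SELECT
    MIN(OrderDate) AS FirstOrder,
    MAX(OrderDate) AS LastOrder
FROM [dbo].[FactInternetSalesUpdate];

-- Fact rows whose shifted order date has no match in the shifted date dimension.
SELECT COUNT(*) AS OrphanRows
FROM [dbo].[FactInternetSalesUpdate] AS f
WHERE NOT EXISTS (
    SELECT 1
    FROM [dbo].[DateDimensionUpdate] AS d
    WHERE d.FulldateAlternateKey5Y = CAST(f.OrderDate AS date)
);
```

A non-zero OrphanRows count would suggest that the two offsets do not line up for this data and that key errors are likely when the cube is processed.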

I assume that you know how to add these views to a new BISM MD project.

The BISM MD model

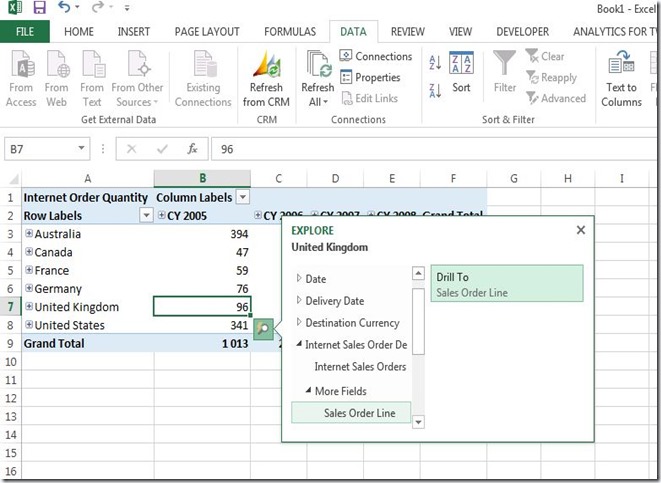

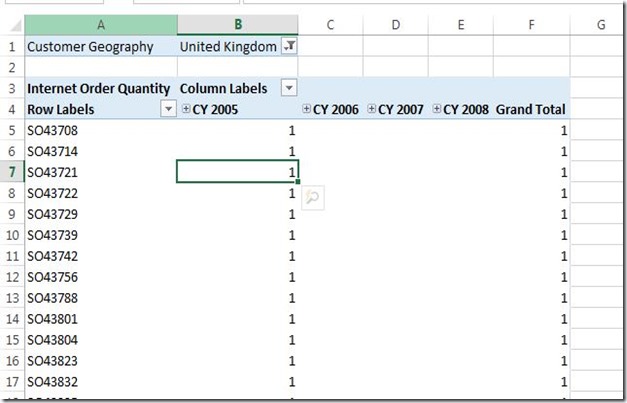

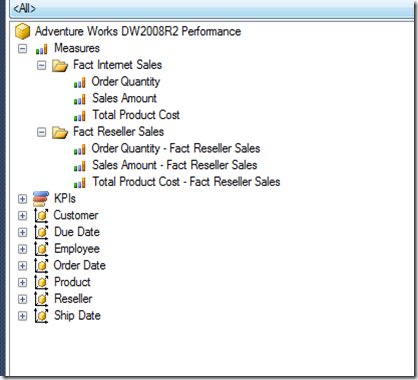

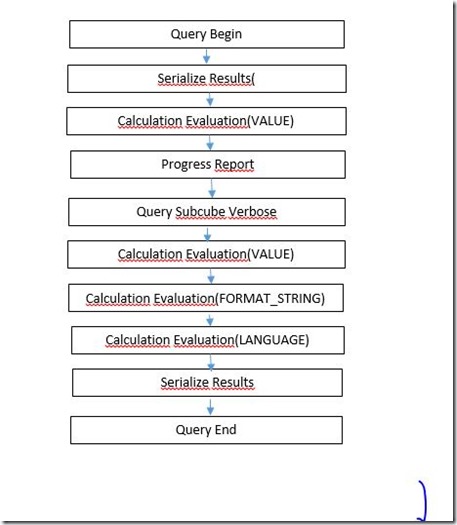

Here is the structure of the model, a simplified version of the Adventure Works cube with only one fact table and with Order Quantity as its only measure.

Here is the dimension usage tab for the cube.

The Date Dimension

Here are the attributes and a hierarchy. I started with this and added the attribute relations for the hierarchy. I assume that you know how to map each attribute to its type property and value column property.

The Mistake

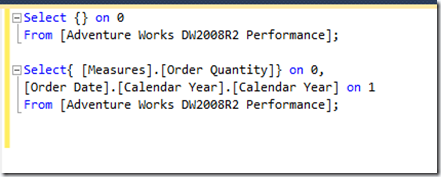

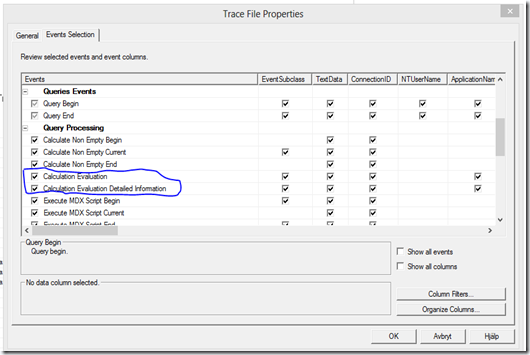

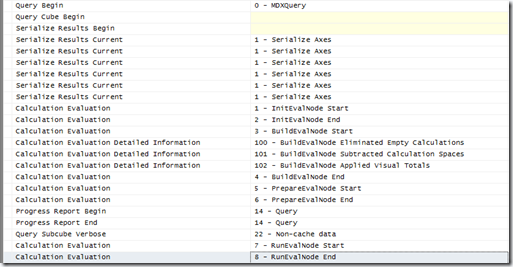

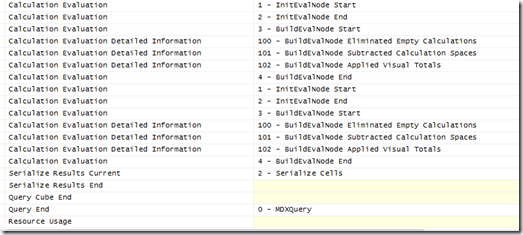

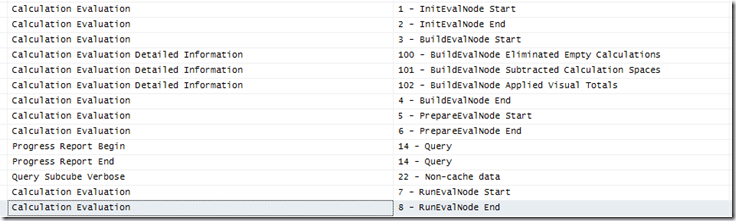

After building the cube with the dimensions and the simple fact table I thought I was close to a working solution, where I could use the current flags to select YearMonth members and MDX calculations would still work. I started in Management Studio by creating some session-based calculations with time intelligence.

WITH Member Measures.ParallelPeriodOrderQty AS

SUM(ParallelPeriod([Order Date].[Year Month5].[Year Month5],12,[Order Date].[Year Month5]),[Measures].[Order Quantity])

Select {[Measures].[Order Quantity],Measures.ParallelPeriodOrderQty} On 0,

[Order Date].[Year Month5].[Year Month5] On 1

From [AdventureWorksDW2012Simple]

Where ([Order Date].[Current12 Months].&[Y])

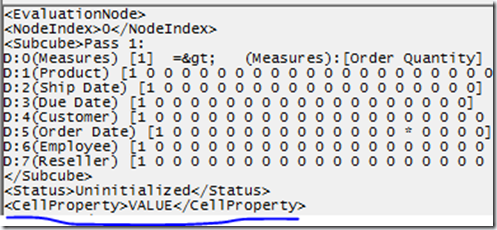

The problem with this MDX query is that every part of it works fine until you add the slicer in the Where clause. With the slicer, ParallelPeriodOrderQty is null, although the correct months still appear on the row axis. So what did I forget to add in the cube?

Attribute Relations

Remember the date dimension picture above. We had a hierarchy and the matching attribute relations for the hierarchy. Here is what solved my problem: I added the current attributes as attribute relations to the Year Month5 attribute, as you can see in the picture.

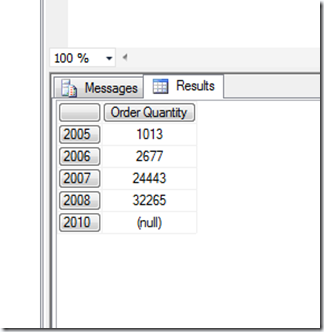

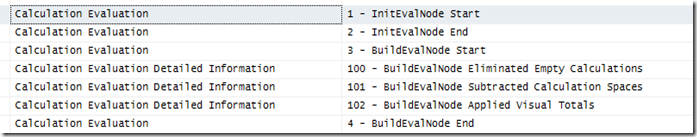

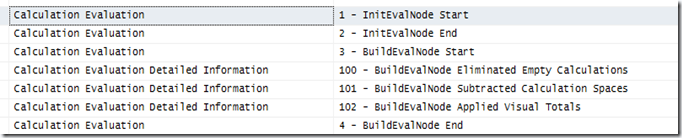

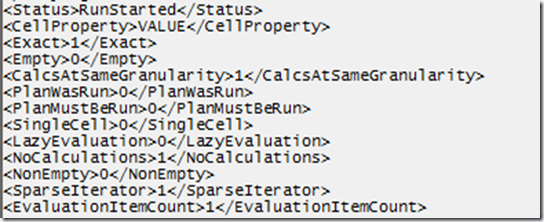

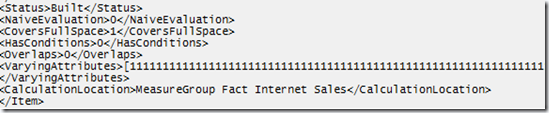
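An attribute relation from Year Month5 to a flag is only valid if every month maps to exactly one flag value. The following check is a sketch against the view defined earlier, using the view's column names. An empty result means the month-based flags are fully determined by YearMonth5.

```sql
-- YearMonth5 values that map to more than one value of a month-based flag.
-- No rows returned means each flag can safely be related to Year Month5.
SELECT YearMonth5
FROM [dbo].[DateDimensionUpdate]
GROUP BY YearMonth5
HAVING COUNT(DISTINCT CurrentMonth)       > 1
    OR COUNT(DISTINCT CurrentThreeMonths) > 1
    OR COUNT(DISTINCT CurrentSixMonths)   > 1
    OR COUNT(DISTINCT Current12Months)    > 1
    OR COUNT(DISTINCT Current24Months)    > 1
    OR COUNT(DISTINCT Current12MonthsLY)  > 1;
```

CurrentDate is deliberately left out of the check. It is a day-level flag, so the current month contains both 'Y' and 'N' values, and it would normally have to stay related to the date key rather than to Year Month5.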

After adding these attribute relations to the date dimension I ran the query again with this result:

Problem solved. Attribute relations are very important in BISM MD. You also get dynamic flags that update each time you process the cube, and you do not have to use named sets. This solution will also work in Power Pivot, as you will see in the next blog post.